Transformation

Define a struct ObjectProperty as follows:

| property | type | comment |
|---|---|---|
| rotation | vec3 | The object's rotation about its own center. |
| scale | vec4 / vec3 | The object's scale factor about its own center. |
| translation | vec3 | The object's position relative to the origin (absolute position). |
| face | vec3 | The direction the object looks at. |

The code:

struct ObjectProperty

{

vec3 rotation;

vec4 scale;

vec3 translation;

vec3 face;

};

Object Transformation

Object Transformation Order:

-> Rotate -> Scale -> Translate

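Rotation and scaling are both applied about the center of the object. With a uniform scale, the order of these two transformations does not influence the result; with a non-uniform scale it does, and the matrix below rotates first.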

The transformation matrix is:

struct ObjectProperty objProp;

mat4 m4ModelMatrix = translate(objProp.translation) * scale(objProp.scale) * rotate(objProp.rotation);

The transformation operations are:

vec4 v4PosInModel = m4ModelMatrix * vertex.position;

Where vertex.position is a vec4 object containing the position of the vertex.
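The order of the factors can be checked with a small CPU-side sketch. It does not use vmath: `Mat4`, `Vec4` and the helper functions below are hypothetical stand-ins, the rotation is limited to the Z axis, and the scale is uniform, all to keep the example short.

```cpp
#include <array>
#include <cmath>

// Minimal stand-ins for vmath's vec4 and mat4 (hypothetical types).
using Vec4 = std::array<float, 4>;
using Mat4 = std::array<std::array<float, 4>, 4>;  // m[row][col]

Mat4 identity() {
    Mat4 m{};  // value-initialized to all zeros
    for (int i = 0; i < 4; ++i) m[i][i] = 1.0f;
    return m;
}

Mat4 multiply(const Mat4& a, const Mat4& b) {
    Mat4 r{};
    for (int i = 0; i < 4; ++i)
        for (int j = 0; j < 4; ++j)
            for (int k = 0; k < 4; ++k)
                r[i][j] += a[i][k] * b[k][j];
    return r;
}

// Matrix times column vector.
Vec4 transform(const Mat4& m, const Vec4& v) {
    Vec4 r{};
    for (int i = 0; i < 4; ++i)
        for (int k = 0; k < 4; ++k)
            r[i] += m[i][k] * v[k];
    return r;
}

Mat4 translation(float x, float y, float z) {
    Mat4 m = identity();
    m[0][3] = x; m[1][3] = y; m[2][3] = z;
    return m;
}

Mat4 scaling(float s) {
    Mat4 m = identity();
    m[0][0] = m[1][1] = m[2][2] = s;
    return m;
}

// Rotation about the Z axis only, to keep the sketch short.
Mat4 rotationZ(float radians) {
    Mat4 m = identity();
    m[0][0] = std::cos(radians); m[0][1] = -std::sin(radians);
    m[1][0] = std::sin(radians); m[1][1] =  std::cos(radians);
    return m;
}

// translate * scale * rotate: the rightmost factor acts on the vertex first.
Mat4 modelMatrix(float tx, float ty, float tz, float s, float radians) {
    return multiply(translation(tx, ty, tz),
                    multiply(scaling(s), rotationZ(radians)));
}
```

With a translation of (5, 0, 0), a scale of 2 and a rotation of 90°, the point (1, 0, 0) is first rotated to (0, 1, 0), then scaled to (0, 2, 0), and finally moved to (5, 2, 0).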

World View Transformation

World View Transformation Order:

-> Rotate -> Translate

Rotate the camera first, and then translate the position. In this way, the view will always face towards the origin.

It looks identical to the object transformation. However, since this matrix left-multiplies the model matrix, its rotation is applied after the object's translation.

The transformation matrix is:

struct ObjectProperty worldViewProp;

mat4 m4ViewMatrix = translate(worldViewProp.translation) * scale(worldViewProp.scale) * rotate(worldViewProp.rotation);

The transformation operations are:

vec4 v4PosInWorldView = m4ViewMatrix * v4PosInModel;

Viewer Camera Transformation

Viewer Camera Transformation Order:

-> Translate -> Rotate

First, translate the objects relative to the viewer position, which means translating by the negated translation vector. Then rotate the camera, which likewise means rotating everything by the opposite of the viewer's rotation.

The transformation matrix is:

struct ObjectProperty viewerProp;

mat4 m4ViewMatrix = rotate(-viewerProp.face) * translate(-viewerProp.translation);

The transformation operations are:

vec4 v4PosInViewerView = m4ViewMatrix * v4PosInModel;
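One way to see why the order flips: if the camera were placed in the scene like any other object, with a rotation $R$ and a translation $T$, its own model matrix would be $T R$. The view matrix undoes that placement, so it is the inverse:

$$ (T R)^{-1} = R^{-1} T^{-1} $$

Here $T^{-1}$ is the translation by the negated vector. For a rotation about a single axis, $R^{-1}$ is the rotation by the negated angle. When rotations about several axes are combined, the inverse also applies them in reverse order, so whether rotate with negated angles gives the exact inverse depends on how it composes its axes and is worth checking.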

NOTICE: Use either the world view or the viewer view, not both at once.

Movement With Camera Rotation

Moving the camera means changing the translation of the view camera. In world view it is easy to keep the movement's front aligned with the camera's front. But in viewer view, where the rotation is carried out after the translation, the movement vector has to be transformed as well.

Generally speaking, this is done by multiplying the movement vector by the current camera rotation matrix.

bool bWorldView; // Whether it's world view or viewer view.

struct ObjectProperty viewerProp;

void MovementFunc(u_char key, int x, int y)

{

const float fTransSensitivity = 1.0f;

vec4 v4Movement = vec4(0.f, 0.f, 0.f, 1.f);

switch (key)

{

// Movement

case 'd': // right

v4Movement = vec4(fTransSensitivity, 0.0f, 0.0f, 1.0f);

break;

case 'a': // left

v4Movement = vec4(-fTransSensitivity, 0.0f, 0.0f, 1.0f);

break;

case 'q': // up

v4Movement = vec4(0.0f, fTransSensitivity, 0.0f, 1.0f);

break;

case 'e': // down

v4Movement = vec4(0.0f, -fTransSensitivity, 0.0f, 1.0f);

break;

case 's': // backward

v4Movement = vec4(0.0f, 0.0f, fTransSensitivity, 1.0f);

break;

case 'w': // forward

v4Movement = vec4(0.0f, 0.0f, -fTransSensitivity, 1.0f);

break;

}

if (bWorldView)

v3ViewTrans += v4Movement.xyz();

else {

mat4 m4Rot = rotateXYZ(viewerProp.face * 360.0f);

vec4 v4Offset = v4Movement * m4Rot;

viewerProp.translation += v4Offset.xyz(); // move the viewer camera, not an object

}

}
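The idea can be reduced to a yaw-only sketch. The names `Vec3`, `rotateAboutY` and `moveCamera` are hypothetical, and only rotation about the Y axis is handled; whether the rotation or its inverse is needed in a real program depends on the conventions of the view matrix.

```cpp
#include <cmath>

struct Vec3 { float x, y, z; };

// Rotate v about the Y axis by yaw radians
// (right-handed, counterclockwise when seen from above).
Vec3 rotateAboutY(const Vec3& v, float yaw) {
    const float c = std::cos(yaw);
    const float s = std::sin(yaw);
    return { c * v.x + s * v.z, v.y, -s * v.x + c * v.z };
}

// Turn a camera-space step (for example "forward" = -Z) into a world-space
// offset and add it to the camera position.
Vec3 moveCamera(const Vec3& position, const Vec3& localStep, float cameraYaw) {
    const Vec3 offset = rotateAboutY(localStep, cameraYaw);
    return { position.x + offset.x, position.y + offset.y, position.z + offset.z };
}
```

With a yaw of 90°, a forward step (0, 0, -1) becomes (-1, 0, 0), so a camera at (1, 2, 3) ends up at (0, 2, 3): pressing forward moves along the direction the camera now faces instead of along the world's -Z axis.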

Lighting

Calculate Vertices' Normal

Every vertex of a triangle starts with the same normal as the triangle itself. But a vertex is usually shared by several adjacent triangles.

So the normal of a vertex should be the mean of the normals of all triangles that contain this vertex.

However, the normals of these triangles are not equally important. Some triangles have a larger angle at the vertex, so they get a larger weight. Assume a triangle with vertices A, B and C. The weight at vertex A is the angle there, recovered from its sine (asin returns the angle itself only up to 90°; for an obtuse angle it returns 180° minus the angle). It can be calculated using the following formula:

$$ \begin{align*} & \text{norm} = (B-A) \times (C-A) && \text{(cross product)} \\ & \text{weight} = \operatorname{asin}\left( \frac{ \operatorname{length}(\text{norm}) }{ \operatorname{length}(B-A) \cdot \operatorname{length}(C-A) } \right) \end{align*} $$
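As a worked example (the triangle is chosen only for illustration), take $A = (0,0,0)$, $B = (1,0,0)$ and $C = (0,1,0)$. At vertex $A$:

$$ \text{norm} = (1,0,0) \times (0,1,0) = (0,0,1), \qquad \text{weight} = \operatorname{asin}\left( \frac{1}{1 \cdot 1} \right) = \frac{\pi}{2} $$

At vertex $B$ the roles of the vertices rotate, so the edges are $C-B = (-1,1,0)$ and $A-B = (-1,0,0)$:

$$ \text{norm} = (-1,1,0) \times (-1,0,0) = (0,0,1), \qquad \text{weight} = \operatorname{asin}\left( \frac{1}{\sqrt{2} \cdot 1} \right) = \frac{\pi}{4} $$

The right angle at $A$ therefore counts twice as much as the 45° angle at $B$.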

The code:

bool calculateElementArrayNormals(donny::vector_view<const GLfloat> positions,

donny::vector_view<const GLushort> indices,

donny::vector_view<GLfloat> normals,

GLsizei stride,

GLuint restartInd)

{

using namespace donny;

using namespace vmath;

if (stride < 3) return false; // Failed

const int length = positions.size() / stride;

// Build vectors from float array

std::vector<vec3> v3Pos; v3Pos.resize(length);

for (int a = 0; a < v3Pos.size(); ++a)

{

v3Pos[a] = vec3(positions[a*stride],

positions[a*stride+1],

positions[a*stride+2]);

}

int sign = -1;

int ii = 0;

std::vector<vec3> v3Normal; v3Normal.resize(length);

for (vec3 &v3Norm : v3Normal) {

v3Norm = vec3(0.f, 0.f, 0.f);

}

while (ii + 2 < indices.size())

{

if (indices[ii] == restartInd || indices[ii+1] == restartInd || indices[ii+2] == restartInd) {

++ii;

sign = -1;

continue;

}

int inds[3] = { indices[ii], indices[ii+1], indices[ii+2] };

for (int &ind : inds) {

int p = (&ind - &inds[0]);

vec3 v = v3Pos[inds[(p+2)%3]] - v3Pos[inds[p]]; // vector begins with this vertex

vec3 u = v3Pos[inds[(p+1)%3]] - v3Pos[inds[p]]; // vector begins with this vertex

vec3 n = cross(v, u) * sign;

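float sin_alpha = length(n) / (length(v) * length(u));

// Rounding can push the ratio slightly above 1, where asin returns NaN; a degenerate triangle gives NaN directly.

if (!(sin_alpha <= 1.0f)) sin_alpha = 1.0f;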

v3Normal[ind] += n * asin(sin_alpha);

}

++ii;

sign *= -1; // Change the sign since the order of next vertex changed.

// From clockwise to anti-clockwise or from anti-clockwise to clockwise.

}

// From vectors to float array

for (int a = 0; a < v3Pos.size(); ++a)

{

v3Normal[a] = normalize(v3Normal[a]);

normals[a*stride+0] = v3Normal[a][0];

normals[a*stride+1] = v3Normal[a][1];

normals[a*stride+2] = v3Normal[a][2];

if (stride == 4) normals[a*stride+3] = 1.0f;

}

return true;

}

Ambient

The ambient term is simple: multiply the color by the ambient vector, because ambient light is assumed to be everywhere.

The code:

/* fragment shader */

in vec4 vs_fs_color; // input fragment color

out vec4 color; // output color

color = vec4(light_ambient, 1.0f) * vs_fs_color; // light_ambient is a vec3; widen it to match the vec4 color

Diffuse

To calculate the diffuse term, we need to know whether the fragment faces the light, and at what angle the light hits it.

The code:

/* fragment shader */

in vec3 vs_fs_position; // input fragment position.

in vec3 vs_fs_normal; // input fragment normal.

/* ... */

// The input vs_fs_normal may no longer be unit length after interpolation.

vec3 normal = normalize(vs_fs_normal);

vec3 light_direction = normalize(light_position - vs_fs_position); // The direction from the fragment towards the light

float diff = max(0.0f, dot(light_direction, normal)); // The response of the light on the fragment

vec3 diffuse_effect = light_diffuse * diff;

Specular

To calculate the specular term, we need to know the viewer's position and determine how directly the reflected light travels towards the viewer's eye.

The code:

/* fragment shader */

in vec3 vs_fs_position; // input fragment position.

in vec3 vs_fs_normal; // input fragment normal.

/* ... */

// The input vs_fs_normal may no longer be unit length after interpolation.

vec3 normal = normalize(vs_fs_normal);

// The direction from the fragment towards the viewer

vec3 view_direction = normalize(view_position - vs_fs_position);

// The direction of the light after it is reflected by the fragment

vec3 reflect_direction = reflect(-light_direction, normal);

// If the back of the fragment faces the light, there is no specular effect.

if (dot(normal, -light_direction) > 0.0f)

reflect_direction = vec3(0.0f, 0.0f, 0.0f);

// The response of the reflected light at the viewer position. pow concentrates the highlight around the reflection direction.

float spec = pow(max(0.0f, dot(view_direction, reflect_direction)), light_shininess);

vec3 specular_effect = light_specular * spec;

Final Effect

Adding up the ambient, diffuse and specular effects gives the final effect of the light on the fragment.

/* fragment shader */

in vec4 vs_fs_color; // input fragment color

out vec4 color; // output color

/* ... */

vec3 final_effect = light_ambient + diffuse_effect + specular_effect;

color = vec4(final_effect, 1.0f) * vs_fs_color; // widen the vec3 effect to match the vec4 color
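The diffuse and specular factors can be reproduced on the CPU to check a configuration by hand. The following is a sketch, not the shader itself: `Vec3`, `PhongTerms` and `phongTerms` are hypothetical names, the light colors are left out, and the normal is assumed to be non-zero.

```cpp
#include <algorithm>
#include <cmath>

struct Vec3 { float x, y, z; };

Vec3 operator-(const Vec3& a, const Vec3& b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
Vec3 operator*(const Vec3& a, float s) { return {a.x * s, a.y * s, a.z * s}; }
float dot(const Vec3& a, const Vec3& b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
Vec3 normalize(const Vec3& v) { return v * (1.0f / std::sqrt(dot(v, v))); }

// Same definition as GLSL reflect(): I - 2 * dot(N, I) * N, with N unit length.
Vec3 reflect(const Vec3& i, const Vec3& n) { return i - n * (2.0f * dot(n, i)); }

struct PhongTerms { float diffuse; float specular; };

PhongTerms phongTerms(const Vec3& position, const Vec3& normalIn,
                      const Vec3& lightPosition, const Vec3& viewPosition,
                      float shininess) {
    const Vec3 normal = normalize(normalIn);
    const Vec3 lightDir = normalize(lightPosition - position);  // fragment -> light
    const Vec3 viewDir = normalize(viewPosition - position);    // fragment -> viewer
    const float diff = std::max(0.0f, dot(lightDir, normal));
    float spec = 0.0f;
    if (diff > 0.0f) {  // no highlight when the light is behind the surface
        const Vec3 reflectDir = reflect(lightDir * -1.0f, normal);
        spec = std::pow(std::max(0.0f, dot(viewDir, reflectDir)), shininess);
    }
    return {diff, spec};
}
```

For a fragment at the origin with normal (0, 0, 1), a light and a viewer both at (0, 0, 5) give a diffuse factor of 1 and a specular factor of 1. Moving the light to (0, 0, -5), behind the surface, gives 0 for both.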

Point Lights Attenuation

The effect of a light decreases as the distance from it grows. The following formula simulates this attenuation, where d is the distance between the light and the fragment:

$$ attenuation = \frac{1.0}{ constant + linear * d + quadratic * d^2 } $$

It considers the linear term and the quadratic term of the attenuation, which are enough in most scenes.

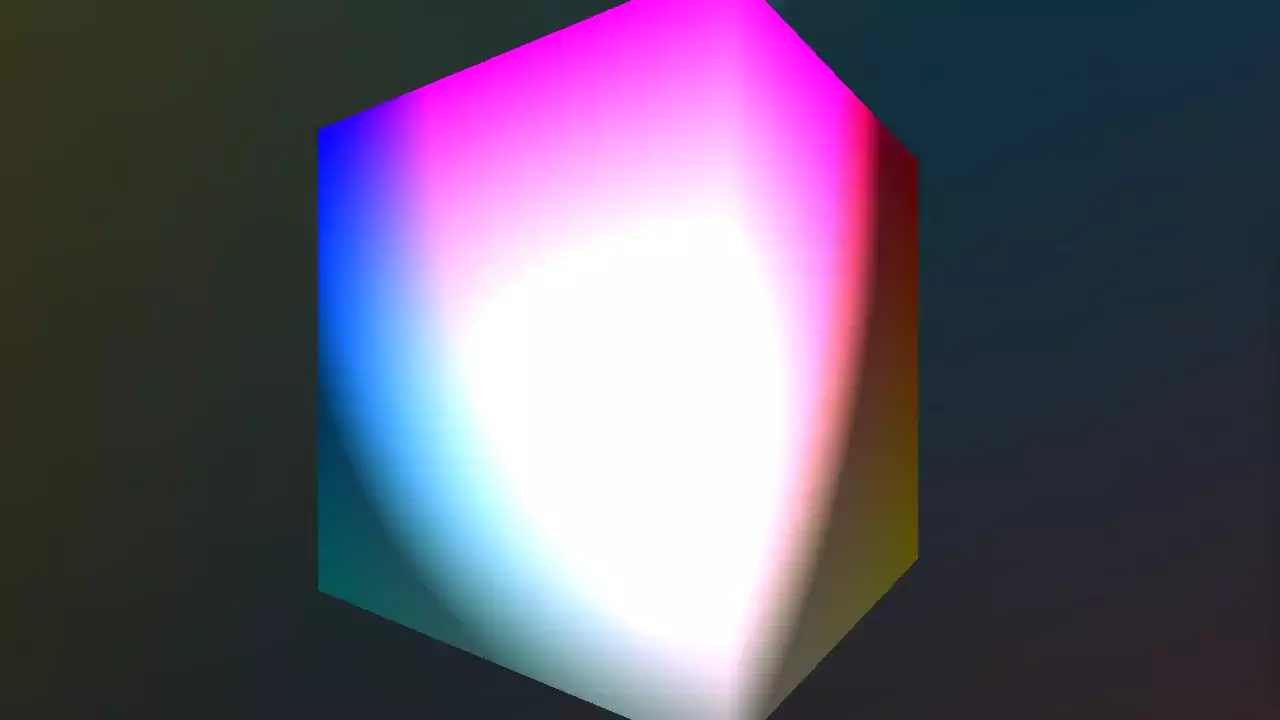

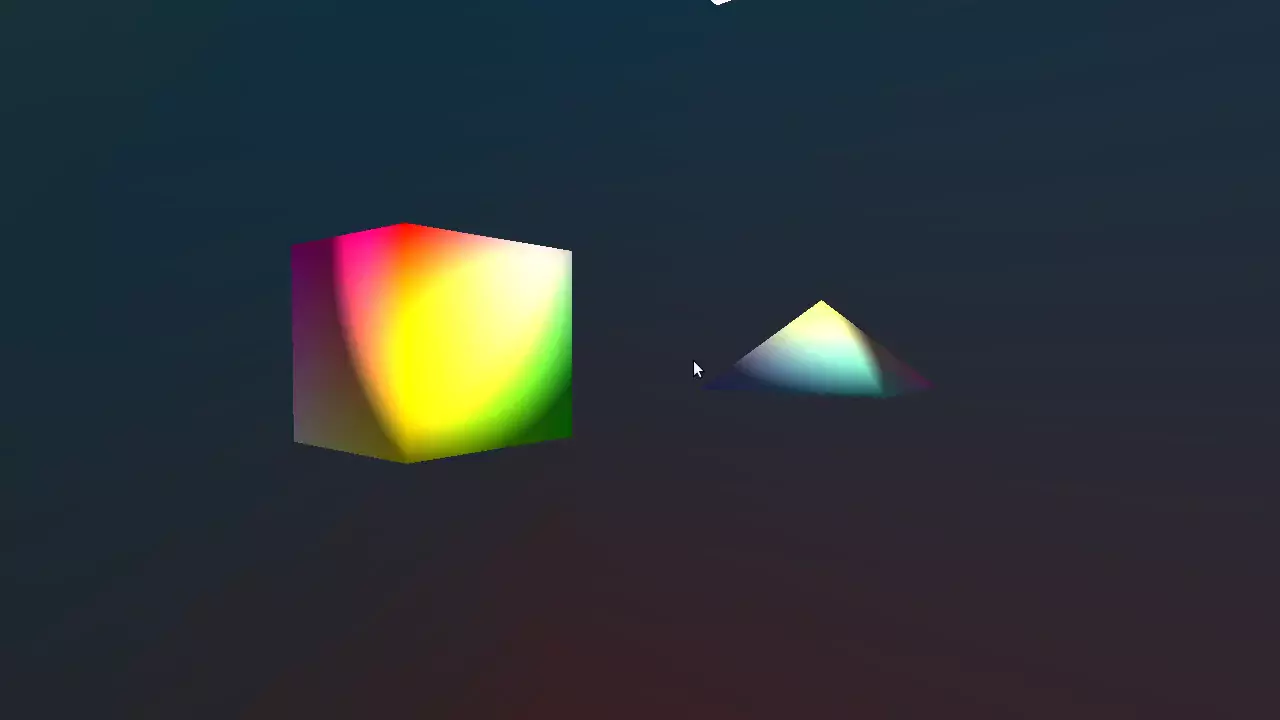

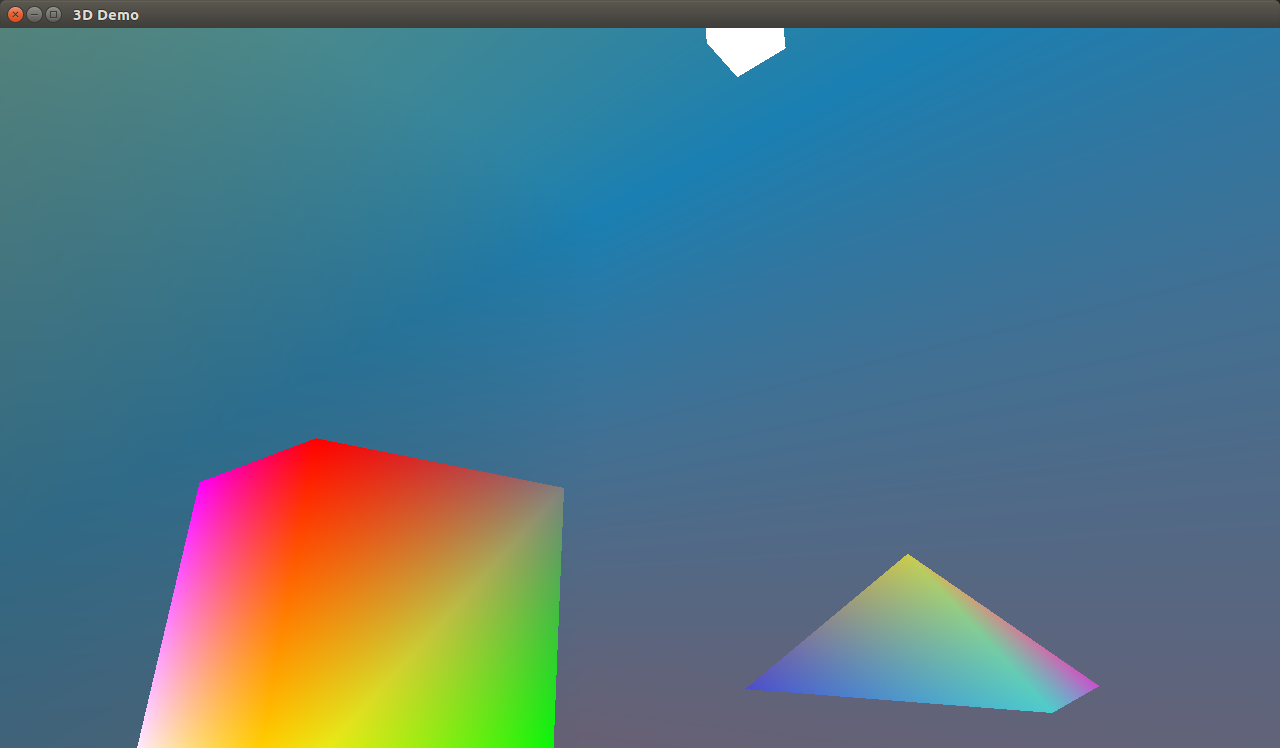

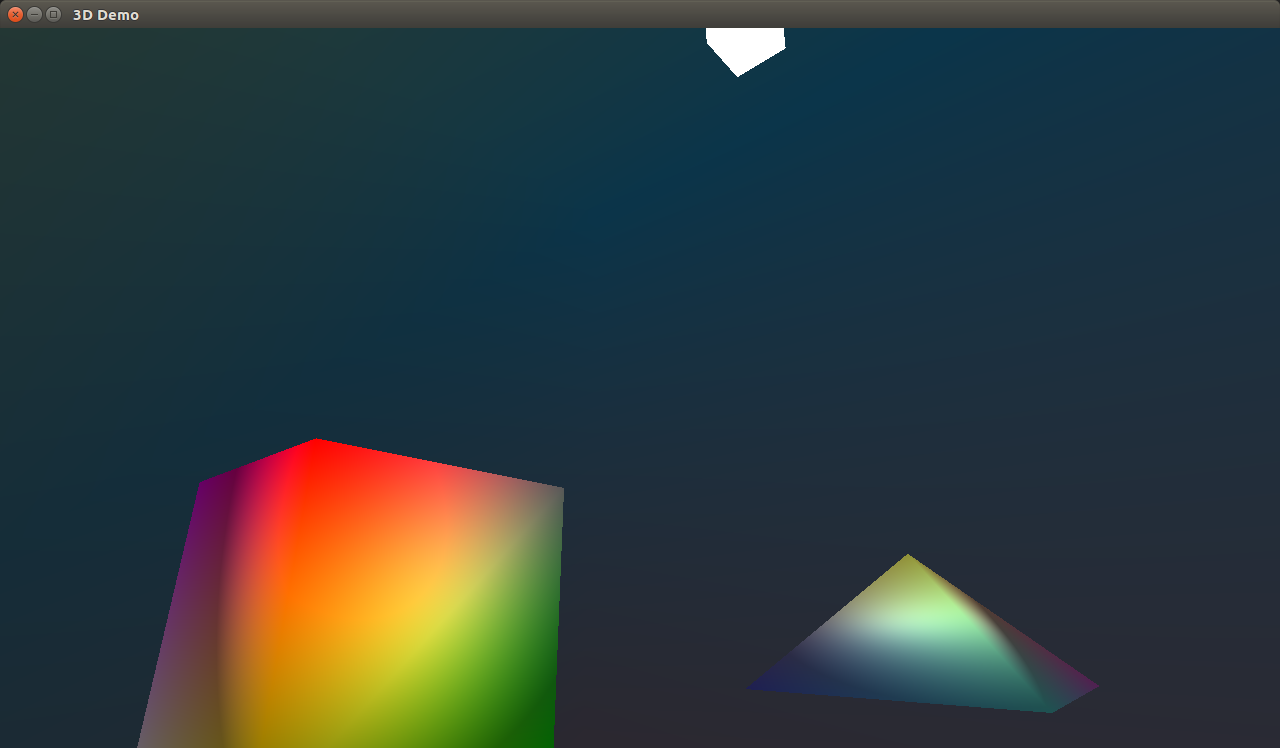

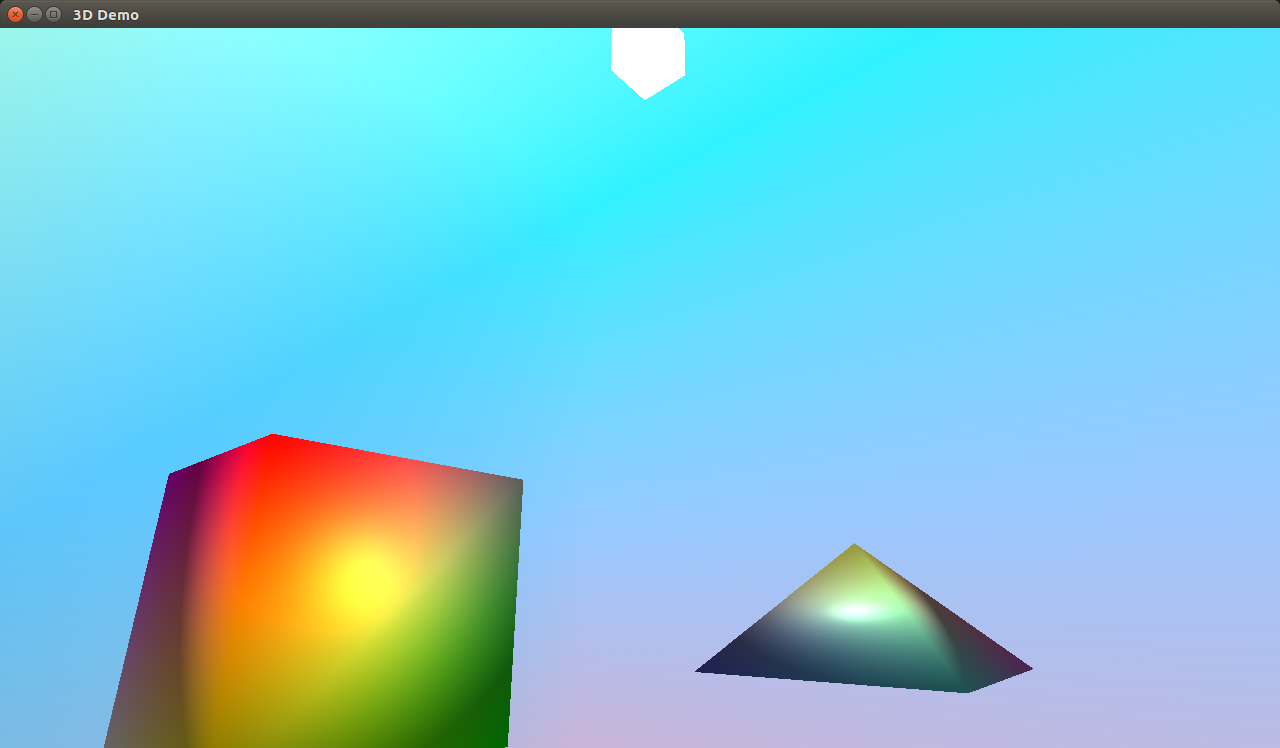

Light effect without attenuation:

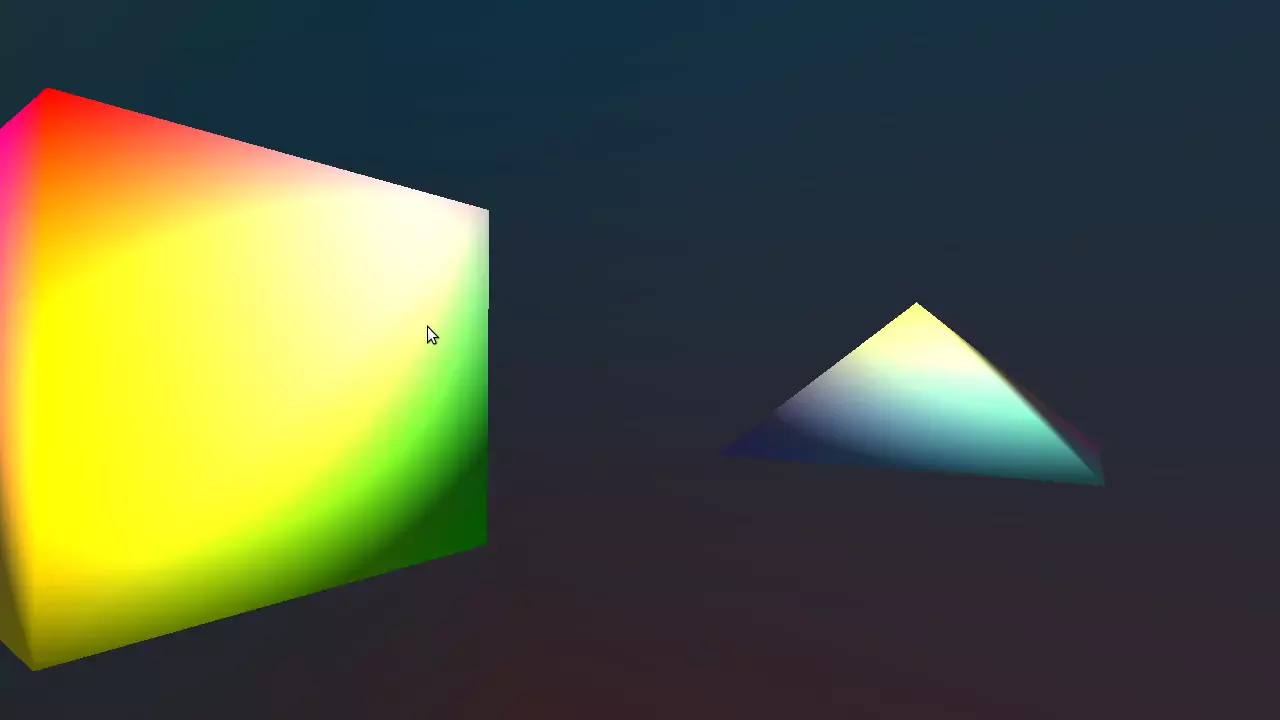

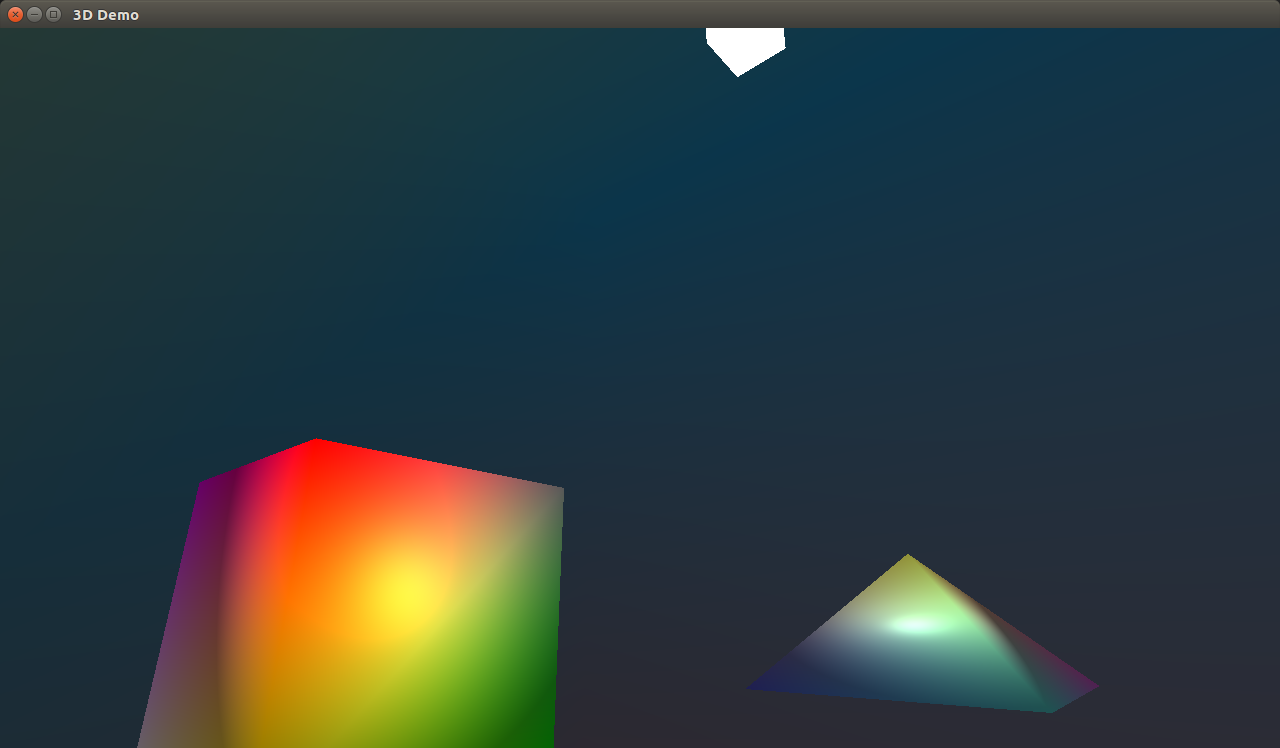

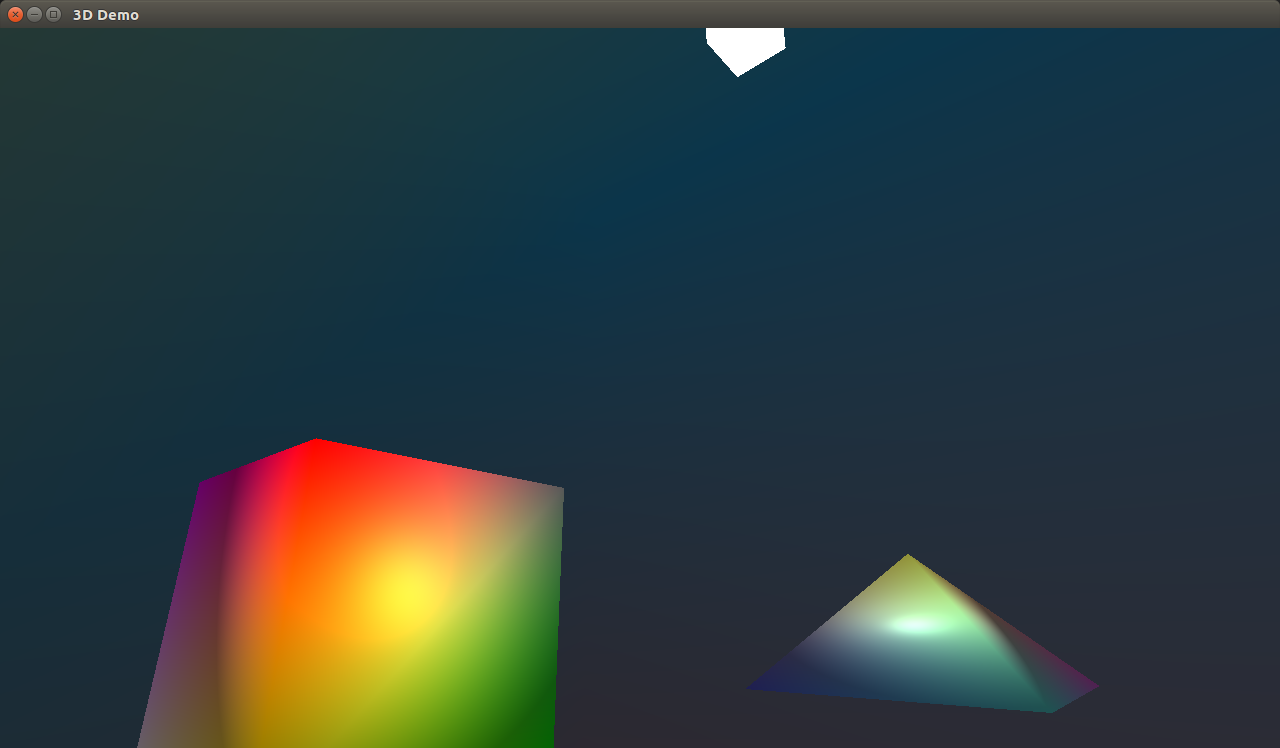

Light effect with attenuation:

The code:

/* fragment shader */

in vec3 vs_fs_position; // input fragment position

in vec4 vs_fs_color; // input fragment color

out vec4 color; // output color

/* ... */

float distance = length(light_position - vs_fs_position);

float attenuation = 1.0f / ( constant + light_linear * distance + light_quadratic * pow(distance, 2) );

vec3 final_effect = (light_ambient + diffuse_effect + specular_effect) * attenuation;

color = vec4(final_effect, 1.0f) * vs_fs_color; // widen the vec3 effect to match the vec4 color

Object Rotation Under Light

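Object rotation also has to be taken into account: the position and the normal of the fragment change with the rotation, so both are transformed in the vertex shader. The normal is a direction, so only the rotation is applied to it.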

The code:

/* vertex shader */

in vec3 in_position;

in vec3 in_normal;

in vec4 in_color;

out vec3 vs_fs_position;

out vec3 vs_fs_normal;

out vec4 vs_fs_color;

// The model rotation matrix.

uniform mat4 model_rot_matrix;

// The model transform matrix.

uniform mat4 model_matrix;

/* ... */

vs_fs_position = vec3(model_matrix * vec4(in_position, 1.0f)); // a mat4 needs a vec4 operand

vs_fs_normal = mat3(model_rot_matrix) * in_normal; // rotation only, no translation

vs_fs_color = in_color;

Gouraud Lighting and Phong Lighting

When the Phong lighting model is implemented in the vertex shader, it's called Gouraud lighting. When implemented in the fragment shader, it's called Phong lighting.

The difference between them is that, because of interpolation, the fragment shader runs for far more fragments than the vertex shader does for vertices. So Phong lighting gives much smoother results than Gouraud lighting for the same number of vertices.
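The difference can be reproduced on the CPU for the diffuse term alone. The sketch below assumes a directional light (so the fragment position does not matter) and interpolates linearly between two vertices; all names are hypothetical.

```cpp
#include <algorithm>
#include <cmath>

struct Vec3 { float x, y, z; };

float dot(const Vec3& a, const Vec3& b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

Vec3 normalize(const Vec3& v) {
    const float len = std::sqrt(dot(v, v));
    return {v.x / len, v.y / len, v.z / len};
}

// Linear interpolation between a and b, like GLSL mix().
Vec3 mix(const Vec3& a, const Vec3& b, float t) {
    return {a.x + (b.x - a.x) * t, a.y + (b.y - a.y) * t, a.z + (b.z - a.z) * t};
}

float diffuse(const Vec3& normal, const Vec3& lightDir) {
    return std::max(0.0f, dot(normalize(normal), lightDir));
}

// Per-vertex lighting: light each vertex, then interpolate the intensities.
float gouraudDiffuse(const Vec3& n0, const Vec3& n1, const Vec3& lightDir, float t) {
    const float i0 = diffuse(n0, lightDir);
    const float i1 = diffuse(n1, lightDir);
    return i0 + (i1 - i0) * t;
}

// Per-fragment lighting: interpolate the normal, then light the fragment.
float phongDiffuse(const Vec3& n0, const Vec3& n1, const Vec3& lightDir, float t) {
    return diffuse(mix(n0, n1, t), lightDir);
}
```

Take two vertex normals tilted 60° to either side of the light direction (0, 0, 1). Halfway between the vertices, per-vertex lighting gives 0.5, the average of two dim vertices, while per-fragment lighting gives 1.0, because the interpolated normal points straight at the light. This is the highlight that Gouraud lighting misses when it falls between vertices.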